a catalog is used, which captures the metadata and provides a query interface for all data assets.

Unified governance

The data lake design should have a strong mechanism for centralized authorization and auditing of data. Configuring access policies across the data lake and across all data stores can be extremely complex and error-prone. Having a centralized way to define policies and enforce them is critical to a data architecture.

Transactional semantics

In a data lake, data is often ingested almost continuously from multiple sources and queried simultaneously by multiple analytic engines. Having atomic, consistent, isolated, durable (ACID) transactions is critical to maintaining data consistency.

For a better understanding, please read the first article before continuing with this one.

Reference Model for Big Data Architecture

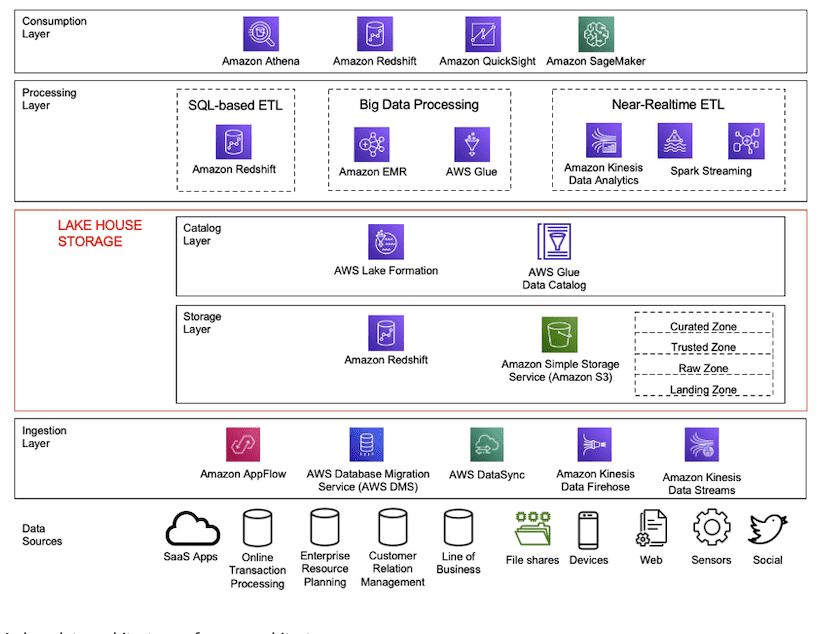

The proposed big data architecture is based on the reference model published by AWS (see Figure 2). The layers of this reference model are presented below, from bottom to top.

Figure 2: AWS Reference Model for Big Data Architecture

Data sources

These include software-as-a-service (SaaS) applications, Online Transaction Processing (OLTP) databases, Enterprise Resource Planning (ERP) databases, Customer Relationship Management (CRM) databases, the databases of each line of business, shared files, mobile devices, web pages, sensors and social networks.

Ingestion layer

In this layer AWS suggests using AWS DMS (Database Migration Service), which performs an initial load of the data, marks a change point, and from that point on keeps the extracted data up to date through change data capture (CDC). AWS DMS can send the data to Amazon Kinesis, either to be written to the Amazon S3 object store through Amazon Kinesis Data Firehose or to be streamed to Amazon Kinesis Data Streams for further processing. Data from SaaS applications is ingested through Amazon AppFlow, and files from shared file systems are transferred and kept up to date using AWS DataSync.
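To make the change data capture idea concrete, here is a minimal sketch in plain Python of replaying change events on top of an initial full load. The event shape (operation, primary key, row) and the function name `apply_changes` are assumptions made for illustration; they are not the record format that AWS DMS emits.

```python
from typing import Dict, Iterable, Optional, Tuple

Row = Dict[str, object]
# Hypothetical change event: (operation, primary key, new row or None)
ChangeEvent = Tuple[str, int, Optional[Row]]


def apply_changes(snapshot: Dict[int, Row], events: Iterable[ChangeEvent]) -> Dict[int, Row]:
    """Replay CDC events, in order, on top of a full-load snapshot."""
    current = dict(snapshot)  # shallow copy so the snapshot itself is not mutated
    for op, key, row in events:
        if op in ("insert", "update"):
            if row is None:
                raise ValueError(f"{op} event for key {key} has no row")
            current[key] = row
        elif op == "delete":
            current.pop(key, None)  # deleting a missing key is treated as a no-op
        else:
            raise ValueError(f"unknown operation: {op}")
    return current


# Initial load, followed by the changes captured after the change point
full_load = {1: {"name": "Ana"}, 2: {"name": "Luis"}}
changes = [
    ("update", 1, {"name": "Ana Maria"}),
    ("delete", 2, None),
    ("insert", 3, {"name": "Sofia"}),
]
state = apply_changes(full_load, changes)
```

The order of the events matters: replaying the same changes in a different order can produce a different final state, which is why the change point marked after the initial load is important.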

Storage layer (Lake House Storage)

This is the layer where the data lake lives. It is made up of different Amazon S3 buckets (S3 is the AWS object storage service), which can be in different accounts and in different regions. The important point is that AWS proposes classifying the data written to the buckets into the following zones:

- Landing Zone: the zone that holds clean, anonymized, hashed data, structured in a data model so that it can be interpreted by a business user rather than a technical one. This data can come from different AWS user accounts.

- Raw Zone: in this zone the data is stored exactly as it arrives from the different sources, without any processing. The data in this zone is normally kept in a secure environment.

- Trusted Zone: the zone where the data is transformed, both to produce the aggregations required by the different analytical engines and to prepare it for subsequent security treatment.

- Curated Zone: in this zone all the mechanisms for protecting PII (personally identifiable information) and for complying with the Payment Card Industry Data Security Standard (PCI DSS) are applied.
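The hashing of sensitive fields mentioned in the zone descriptions can be sketched as follows. This is an illustrative example only: the function name `pseudonymize`, the sample record and the demo key are assumptions, and a keyed hash is pseudonymization rather than full anonymization. On its own it does not make a dataset PCI DSS compliant.

```python
import hashlib
import hmac
from typing import Dict, Iterable


def pseudonymize(record: Dict[str, str], pii_fields: Iterable[str], secret_key: bytes) -> Dict[str, str]:
    """Return a copy of the record with the listed fields replaced by keyed hashes."""
    masked = dict(record)  # copy so the original record is left untouched
    for field in pii_fields:
        if field in masked:
            digest = hmac.new(secret_key, masked[field].encode("utf-8"), hashlib.sha256)
            masked[field] = digest.hexdigest()
    return masked


# Hypothetical record; in practice the key would come from a secrets manager
raw = {"customer_id": "42", "email": "ana@example.com", "country": "CO"}
masked = pseudonymize(raw, ["email"], secret_key=b"demo-key-not-for-production")
```

Because the hash is deterministic for a given key, the same e-mail address always maps to the same token, so the masked column can still be used for joins and counts.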

Catalog management layer (Lake House Catalog)

The catalog of all the data in the data lake is managed through the AWS Glue Data Catalog, which stores the schemas and the different versions of each data structure. Data access permissions are managed with AWS Lake Formation, by schema, table and even column, according to the roles defined in AWS IAM.
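As an illustration of column-level permissions, the sketch below builds the arguments for a Lake Formation grant that lets one IAM role read only selected columns of a table. The helper name `column_select_grant`, the role ARN, the database and the table are hypothetical. The actual call to the boto3 `lakeformation` client is left as a comment because it needs AWS credentials and an existing catalog table.

```python
from typing import Dict, List


def column_select_grant(role_arn: str, database: str, table: str, columns: List[str]) -> Dict[str, object]:
    """Build keyword arguments for a column-level SELECT grant."""
    if not columns:
        raise ValueError("at least one column is required")
    return {
        "Principal": {"DataLakePrincipalIdentifier": role_arn},
        "Resource": {
            "TableWithColumns": {
                "DatabaseName": database,
                "Name": table,
                "ColumnNames": list(columns),
            }
        },
        "Permissions": ["SELECT"],
    }


request = column_select_grant(
    role_arn="arn:aws:iam::111122223333:role/analyst",  # hypothetical IAM role
    database="sales_db",                                # hypothetical Glue database
    table="orders",                                     # hypothetical table
    columns=["order_id", "order_date", "total"],
)

# With AWS credentials configured, the grant could be sent like this:
# import boto3
# boto3.client("lakeformation").grant_permissions(**request)
```

Columns that are not listed, such as a customer e-mail column, stay invisible to that role when it queries the table.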

Processing layer

This layer is divided into three sublayers:

- Data warehouse (SQL-based ETL): the sublayer that stores the fact tables (facts) and the dimension tables (dim), which are updated through queries run against the different databases and even against the data lake. This sublayer is implemented using Amazon Redshift and is the source for the analytics engines (Apache Superset, Amazon QuickSight, Tableau, Power BI, etc.).

- Big Data Processing: in this sublayer all the aggregation operations are carried out using the map and reduce operations of the Apache Spark distributed computing framework. Amazon EMR (Elastic MapReduce) provides servers that can be turned on and off for each calculation, and AWS Glue is a particular way of using Apache Spark with specific benefits for the AWS platform. In Glue, workflows are declared that invoke jobs and crawlers. Jobs contain the programs, written in Python or in PySpark (Python for Apache Spark), that perform the distributed computing. Crawlers read the data in one or several directories, automatically detect its structure and data types, and generate tables in the Glue catalog, even recognizing partitions by year, month, day and hour (the format that Amazon Kinesis Data Firehose uses by default to write data to S3).

- Near-Real-Time ETL: using Apache Spark Streaming and Amazon Kinesis Data Streams, the data can be read from the source in near real time and either processed in memory or written to S3. If the processing is done in memory, Amazon Kinesis Data Analytics can be used to summarize and aggregate the data and send it, for example, to the feature store that feeds the machine learning models.
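The year, month, day and hour layout mentioned above can be illustrated with a short sketch. By default, Kinesis Data Firehose prefixes the objects it writes to S3 with the UTC time in the form `YYYY/MM/dd/HH/`. The two helper functions and the sample key below are hypothetical and only show how such a prefix maps to partition values; they do not reproduce how a Glue crawler works internally.

```python
from datetime import datetime, timezone
from typing import Dict


def hourly_prefix(ts: datetime) -> str:
    """Return the year/month/day/hour prefix for a timezone-aware timestamp, in UTC."""
    return ts.astimezone(timezone.utc).strftime("%Y/%m/%d/%H/")


def partitions_from_key(key: str, base_prefix: str) -> Dict[str, str]:
    """Extract year, month, day and hour from an object key under base_prefix."""
    if not key.startswith(base_prefix):
        raise ValueError(f"{key!r} is not under {base_prefix!r}")
    parts = key[len(base_prefix):].split("/")
    if len(parts) < 5:  # four time folders plus the object name
        raise ValueError(f"{key!r} has no year/month/day/hour folders")
    year, month, day, hour = parts[:4]
    return {"year": year, "month": month, "day": day, "hour": hour}


ts = datetime(2023, 3, 7, 14, 5, tzinfo=timezone.utc)
key = "raw/orders/" + hourly_prefix(ts) + "part-0001.json"  # hypothetical object key
parts = partitions_from_key(key, "raw/orders/")
```

Partitioning by time in this way lets a query engine skip every folder outside the requested time range instead of scanning the whole dataset.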

Consumption layer

In this layer, Amazon Athena is used to run SQL queries against the Glue catalog tables, which are connected to the data lake and to other data sources. Athena allows workgroups to be established with quotas for the queries they run, and those queries are executed using distributed computing on S3. Amazon Redshift can also be used to query the data warehouse tables, Amazon QuickSight to build analytical reports, and Amazon SageMaker to build machine learning models.
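As a rough sketch of how a query is tied to a workgroup, the example below builds the arguments for an Athena query. The helper name `athena_query_request`, the database, the table and the workgroup are hypothetical. It also assumes that the workgroup already has a query result location configured. The call to the boto3 `athena` client is left as a comment because it requires AWS credentials.

```python
from typing import Dict


def athena_query_request(sql: str, database: str, workgroup: str) -> Dict[str, object]:
    """Build keyword arguments for running a SQL query inside a workgroup."""
    if not sql.strip():
        raise ValueError("sql must not be empty")
    return {
        "QueryString": sql,
        "QueryExecutionContext": {"Database": database},
        "WorkGroup": workgroup,
    }


request = athena_query_request(
    sql="SELECT order_date, SUM(total) AS revenue FROM orders GROUP BY order_date",
    database="sales_db",   # hypothetical Glue database
    workgroup="analysts",  # hypothetical workgroup with its own query limits
)

# With AWS credentials configured, the query could be started like this:
# import boto3
# response = boto3.client("athena").start_query_execution(**request)
# response["QueryExecutionId"] can then be used to poll for the result.
```

Running each team's queries in its own workgroup is what allows limits and costs to be tracked per team.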

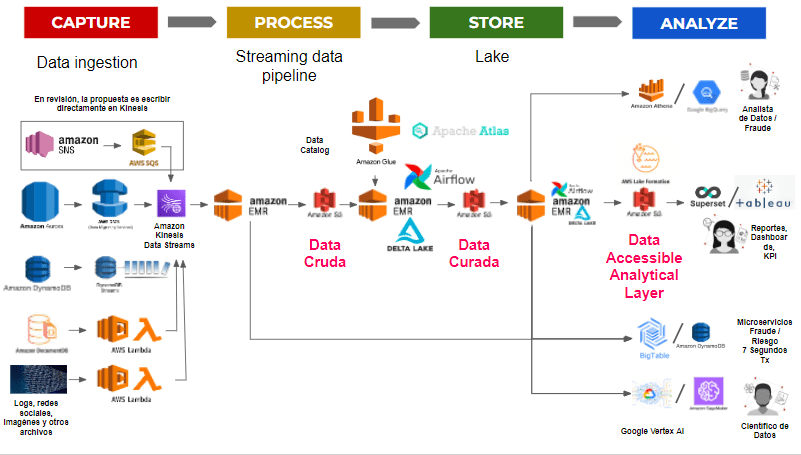

Figure 3: Proposed big data architecture with corresponding products