One of the big mistakes we make when dealing with a subject is to see only the part that concerns us and not try to acquire a broader view of it. Systems thinking calls this seeing the forest and not just the trees. This is especially important when you manage big data.

Eighteen years ago, the first article published by Doug Cutting defined most of the ideas of how to manage big data: distributed file systems, map and reduce algorithms, and search engines. Since then, companies and universities have approached the subject from isolated perspectives: databases, data science, data analytics and data governance.

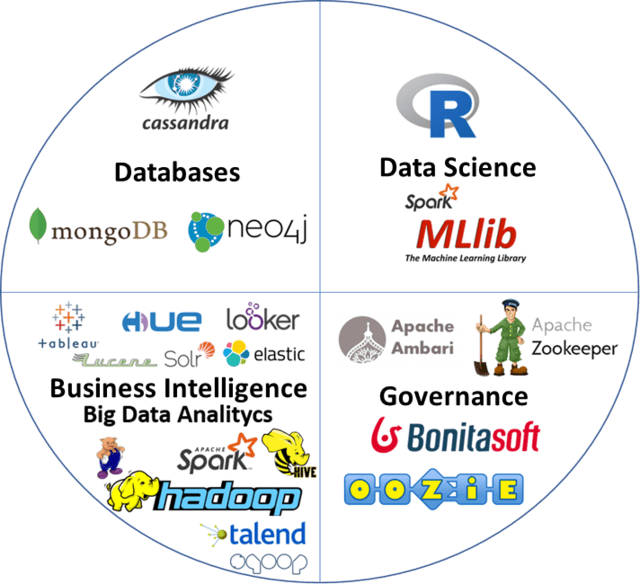

The truth is that today we can talk about Big Data as a new paradigm that covers all of these topics under a set of common requirements: volume, variety, velocity and veracity. When we talk about Big Data management, it is necessary to consider four aspects equally: (1) Databases; (2) Big Data Analytics or BI; (3) Data Science; and (4) Governance (see Figure 1).

Figure 1: Data Management software

Optimization of database management

The first aspect addresses databases. The relational model presented by Edgar Frank Codd stores data as a table of rows and columns, where each new attribute creates a new column even though many rows have no value for it. According to research by the Gartner Group, this model has only allowed organizations to manage 30% of their data.

This is why a new concept called key-value has emerged to store and manage data. With it, different rows can have different attributes. There are mainly three types of key-value databases in Big Data, and for each there is at least one free software product that I have successfully tested, installed and configured for production clients over the last five years:

- 1.- Transactional with high availability = Apache Cassandra

- 2.- Document management = MongoDB

- 3.- Georeferencing = Neo4j (a graph database)
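The idea that different rows can carry different attributes can be sketched in a few lines of Python. This is only an illustration with a hypothetical in-memory store; the names `store`, `put` and `get` are assumptions made for the example and do not correspond to the API of Cassandra, MongoDB or Neo4j.

```python
# Hypothetical in-memory key-value store: each key maps to its own attributes.
store = {}

def put(key, **attributes):
    """Insert or update a row; only the attributes supplied are stored."""
    store.setdefault(key, {}).update(attributes)

def get(key, attribute, default=None):
    """Read one attribute; a row that lacks it simply returns the default."""
    return store.get(key, {}).get(attribute, default)

# Two rows with different attribute sets; no empty columns are created.
put("customer:1", name="Ana", email="ana@example.com")
put("customer:2", name="Luis", phone="555-0100")

print(get("customer:1", "email"))  # ana@example.com
print(get("customer:2", "email"))  # None: this row never stored an email
```

In a relational table, adding `phone` would create a column that is empty for every other row; here each row holds only what it actually has.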

Big Data Analytics

In the context of Big Data, the term Business Intelligence has evolved into Big Data Analytics, where you do not necessarily work with a database but rather with a distributed file system.

This file system continues to be populated through data extraction, transformation, cleaning and loading tools, but now these tools write in newer file formats (Avro, Parquet, ORC). The forerunner of these distributed file systems was Google File System (GFS), which inspired what is now known as the Hadoop Distributed File System (HDFS), the storage layer of Apache Hadoop.

Apache Hadoop

This new type of file system uses distributed computing to process large amounts of data (more than a million records, with high variability and with queries answered in less than 5 seconds) across several servers.

The first concept that Hadoop introduces is the creation of an active high-availability cluster, with a name server for the files (NameNode) and several servers where the files reside (DataNodes). Hadoop then splits each file into blocks and replicates every block across as many DataNodes as configured. This also allows the architecture to scale horizontally, according to the needs of the client.
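The splitting and replication described above can be sketched as follows. This is a simplified illustration, not how HDFS is implemented: the functions `split_into_blocks` and `place_replicas`, the node names and the round-robin placement are assumptions for the example (real HDFS placement is rack-aware, and its block size is typically in the range of 128 MB rather than a few bytes).

```python
def split_into_blocks(data, block_size):
    """Cut a file's bytes into fixed-size blocks; the last one may be shorter."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]

def place_replicas(num_blocks, data_nodes, replication):
    """Assign each block to `replication` distinct nodes, round-robin.

    Simplified assumption: real HDFS also considers racks and free space.
    """
    if replication > len(data_nodes):
        raise ValueError("replication factor exceeds the number of data nodes")
    placement = {}
    for block_id in range(num_blocks):
        placement[block_id] = [
            data_nodes[(block_id + r) % len(data_nodes)]
            for r in range(replication)
        ]
    return placement

blocks = split_into_blocks(b"abcdefghij", block_size=4)
nodes = ["dn1", "dn2", "dn3", "dn4"]  # hypothetical DataNode names
placement = place_replicas(len(blocks), nodes, replication=3)

for block_id, replicas in placement.items():
    print(block_id, blocks[block_id], replicas)
```

Because every block lives on several nodes, losing one server does not lose data, and adding servers gives the placement function more nodes to spread blocks over.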

Once the data is stored in HDFS, the next component is the MapReduce algorithm. The map step turns each record into key-value pairs and the reduce step aggregates all the values that share a key, so what gets written is the summarized result and not the raw data. Queries can then be made on the reduced data. Since Hadoop 2, MapReduce jobs are scheduled by YARN (Yet Another Resource Negotiator), the cluster resource manager that ships with Hadoop.
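A minimal single-machine sketch of the map, shuffle and reduce steps, using the classic word count as the example. The function names are assumptions for illustration; in Hadoop each phase would run distributed across the cluster rather than in one Python process.

```python
from collections import defaultdict

def map_phase(line):
    """Map: emit a (word, 1) pair for every word in a line."""
    return [(word.lower(), 1) for word in line.split()]

def shuffle(pairs):
    """Shuffle: group all emitted values by their key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(key, values):
    """Reduce: collapse the values of one key into a single result."""
    return key, sum(values)

lines = ["big data is big", "data is distributed"]
mapped = [pair for line in lines for pair in map_phase(line)]
counts = dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())

print(counts)  # {'big': 2, 'data': 2, 'is': 2, 'distributed': 1}
```

Only the four aggregated counts are kept at the end, not the seven original words, which is the sense in which the output is a reduction of the input.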

Apache Spark

Apache Spark is a faster, in-memory alternative to MapReduce for distributed computing clusters, and it can run on cluster managers such as Apache Mesos or YARN. Currently, most organizations use Spark to schedule programs that divide summarization, grouping and selection tasks over the data stored in HDFS. However, to use Spark it is necessary to write a program in Java, Python, Scala, etc.

This program commonly loads the data from HDFS into a resilient distributed dataset (RDD), which is nothing more than a collection of elements partitioned across the cluster nodes that can be processed in parallel, or into a data frame (rows and columns). It then performs SQL queries on these structures and finally writes the result back to HDFS, to a relational database or to a CSV file, for example to be consumed by a data visualization and reporting tool such as Tableau, Hadoop User Experience (HUE) or Looker.
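The notion of a collection partitioned across nodes and processed in parallel can be illustrated without a cluster. The sketch below deliberately does not use the Spark API: it imitates the idea with Python's standard library, where threads stand in for cluster nodes, and the names `partition` and `sum_partition` and the sample figures are assumptions made for the example.

```python
from concurrent.futures import ThreadPoolExecutor

def partition(elements, num_partitions):
    """Split a collection into partitions, one per (simulated) node."""
    return [elements[i::num_partitions] for i in range(num_partitions)]

def sum_partition(part):
    """Work done independently on each partition: a partial aggregate."""
    return sum(part)

sales = [120, 80, 45, 300, 15, 60, 210, 95]  # made-up sample data
partitions = partition(sales, 3)

# Each partition is processed in parallel, then partial results are combined.
with ThreadPoolExecutor(max_workers=3) as pool:
    partial_sums = list(pool.map(sum_partition, partitions))

total = sum(partial_sums)
print(partial_sums, total)  # [630, 190, 105] 925
```

In Spark the same pattern applies at cluster scale: each node aggregates its own partitions and only the small partial results travel over the network.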

Hive

Since querying the data in HDFS would otherwise require writing a program, the Hadoop community needed another mechanism that lets database administrators and functional analysts continue to run SQL queries. This mechanism is Hive.

Hive translates SQL statements into MapReduce jobs scheduled through YARN, or into Spark jobs, that run over the data in HDFS. This way, queries can be made directly on the data in Hadoop.
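To make the translation idea concrete, the sketch below runs the same aggregation twice: once as a SQL `GROUP BY` and once as explicit map, shuffle and reduce steps. It is only an analogy under stated assumptions: `sqlite3` from the Python standard library stands in for a SQL engine, the `sales` table and its rows are invented, and Hive's actual query planner is far more elaborate.

```python
import sqlite3
from collections import defaultdict

rows = [("north", 100), ("south", 40), ("north", 60), ("east", 25), ("south", 10)]

# 1) The SQL statement an administrator would write.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount INTEGER)")
conn.executemany("INSERT INTO sales VALUES (?, ?)", rows)
sql_result = dict(
    conn.execute("SELECT region, SUM(amount) FROM sales GROUP BY region")
)
conn.close()

# 2) The equivalent plan expressed as map / shuffle / reduce.
mapped = [(region, amount) for region, amount in rows]       # map: emit key, value
grouped = defaultdict(list)
for region, amount in mapped:                                # shuffle: group by key
    grouped[region].append(amount)
mapreduce_result = {r: sum(a) for r, a in grouped.items()}   # reduce: aggregate

print(sql_result == mapreduce_result)  # True
```

The `GROUP BY` column becomes the map key and the aggregate function becomes the reducer, which is the kind of correspondence a SQL-to-MapReduce translator relies on.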

Hive has a metastore, which can reside in an embedded relational database or in an object-relational database such as PostgreSQL. The metastore stores the link between the metadata with which the tables are created and the data in Hadoop that populates those tables through partitions. The data itself remains in Hadoop.

When a series of queries needs to be automated (in the style of a stored procedure), Apache Pig can also be used, a tool that provides a procedural language for automating queries in Hadoop. It is important to mention that the data in Hadoop is not indexed, so the Lucene API is also used, through Solr or Elasticsearch, to place the indexed data in information cubes that can later be queried through web services by reporting applications or by other applications in the organization.
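The kind of index that Lucene-based engines build is an inverted index, which maps each term to the documents that contain it. The sketch below is a toy version under simplifying assumptions (whitespace tokenization, no stemming or ranking); the function names and sample documents are invented and are not the Lucene, Solr or Elasticsearch API.

```python
from collections import defaultdict

def build_inverted_index(documents):
    """Map every term to the set of document ids in which it appears."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for term in text.lower().split():
            index[term].add(doc_id)
    return index

def search(index, query):
    """Return the ids of documents that contain every term of the query."""
    terms = query.lower().split()
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result

documents = {
    1: "hadoop stores files in blocks",
    2: "spark reads files from hadoop",
    3: "hive translates sql",
}
index = build_inverted_index(documents)

print(sorted(search(index, "hadoop files")))  # [1, 2]
```

A lookup touches only the entries for the query terms instead of scanning every file, which is why indexing makes searches over unindexed Hadoop data practical.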

Finally, Big Data Analytics requires multidimensional report presentation and analysis tools, such as Apache Superset, Tableau, Looker and HUE (Hadoop User Experience).

Data Science

Data Science is the identification of patterns in large volumes of data through statistical models, either to establish the probability of an event occurring (regression model) or to establish a characterization (classification model).

To perform Data Science, RStudio is commonly used, a development environment for the R language. R is an evolution of the S language, which was used in the 1990s in most computer-assisted statistical studies.

RStudio allows performing structural analysis and transformations on a data set:

- Perform quantitative analysis using descriptive statistics

- Identify the variables to predict and the type of model (regression or classification)

- Separate the data set into train (data for training the model) and test (data for testing the model)

- Perform analysis using decision trees and regression to indicate the importance of attributes

- Perform feature engineering
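Of the steps listed above, the train/test separation is the easiest to show in code. The sketch below uses plain Python rather than R, and the function `train_test_split`, its default ratio and its seed are assumptions chosen for the example; R and most machine learning libraries offer their own equivalents.

```python
import random

def train_test_split(rows, test_ratio=0.2, seed=42):
    """Shuffle a copy of the rows and cut it into train and test subsets."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split repeatable
    n_test = int(round(len(shuffled) * test_ratio))
    return shuffled[n_test:], shuffled[:n_test]

dataset = list(range(10))  # stand-in for ten observations
train_rows, test_rows = train_test_split(dataset, test_ratio=0.3)

print(len(train_rows), len(test_rows))  # 7 3
```

Shuffling before cutting avoids a biased split when the data is ordered, and keeping the test rows out of training is what makes the later evaluation of the model meaningful.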

When a model must be applied to a large amount of data, it is necessary to store the data in Apache Hadoop and write a program that runs on Spark, which offers R functionality through SparkR and machine learning through the MLlib library.

Governance

In Big Data, Governance is achieved through the modeling and implementation of eight processes: (1) Data operations management; (2) Master and reference data management; (3) Document and content management; (4) Big Data security management; (5) Big Data development; (6) Big Data quality management; (7) Big Data metadata management; and (8) Data architecture, Data Warehousing and BI management.

Figure 2: Big Data Quality Governance

In another publication I will be addressing each of these processes and their automation.

In conclusion, when dealing with the topic of Big Data, it is necessary to address all of its aspects systematically and not neglect any of them.